Training Custom Vision

So I had this idea to build an app that can identify specific dogs. The ideal outcome would be to point a mobile device at a dog and have it tell you the dog's name. Basically facial recognition for dogs. I soon realized that might be a stretch for the first iteration, so let's just start with breeds.

After doing some research, I found an existing dataset that nearly every walkthrough on this topic uses: the Stanford Dogs Dataset. That is what we will use for our training data. The dataset is quite extensive, covering 120 breeds with over 20,000 images, so beware: large downloads ahead!

I also found a bunch of walkthroughs and tutorials that include all the Python code you can stand, covering just about every AI model out there. However, I love C# and Azure Cognitive Services, so that is what we are going with.

Download the data

The first thing you need to do is download the data. Head over to http://vision.stanford.edu/aditya86/ImageNetDogs/ and grab the Images file and the Annotations file. Extract both files into the same directory. In other words, you will end up with an images folder and an annotations folder in the same root folder.

Clean the Data

Next we need to do some data cleansing. A few of the annotations in the dataset have the file and folder names for the image set to ‘%s’. I suspect this is not an issue for the original Python scripts, but we need to fix it before we upload anything. I have a gist that you can copy and paste into a new console app that will do this for you.

https://gist.github.com/Corneliuskruger/dc7a52650e24f47d4d87b164e51de16f
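If you would rather see the idea than copy the gist, here is a minimal sketch of one way to do the cleanup. It is not the code from the gist. `AnnotationCleaner` and `FixPlaceholders` are names I made up for illustration, and the sketch assumes the annotation XML has `filename` and `folder` elements directly under the root, and that the real values can be derived from the annotation file's own path.

```csharp
using System.IO;
using System.Xml.Linq;

// Hypothetical helper for illustration; the linked gist may work differently.
public static class AnnotationCleaner
{
    // Replaces a '%s' placeholder in <filename> and <folder> with values derived
    // from the annotation file's path. Returns true if the document was changed.
    public static bool FixPlaceholders(XDocument doc, string annotationPath)
    {
        var root = doc.Root;
        if (root == null) return false;

        var changed = false;

        var fileName = root.Element("filename");
        if (fileName != null && fileName.Value.Trim() == "%s")
        {
            // Assumption: the image shares its base name with the annotation file.
            fileName.Value = Path.GetFileNameWithoutExtension(annotationPath);
            changed = true;
        }

        var folder = root.Element("folder");
        if (folder != null && folder.Value.Trim() == "%s")
        {
            // Assumption: the folder value should match the name of the directory
            // that contains the annotation file. Adjust if your layout differs.
            var parent = Path.GetDirectoryName(annotationPath);
            folder.Value = string.IsNullOrEmpty(parent) ? string.Empty : Path.GetFileName(parent);
            changed = true;
        }

        return changed;
    }
}
```

To use it, load each annotation with `XDocument.Load(path)`, call `FixPlaceholders(doc, path)`, and save the document back only when the method returns true.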

Now that the data is clean, we can start the fun part.

Create your Custom Vision Project

When creating your project, you have a few options to choose from. My initial reaction was to use the Object Detection type, as my use case would be to take a photo of a bunch of dogs and have them all recognized. However, I ran into some blockers going this way.

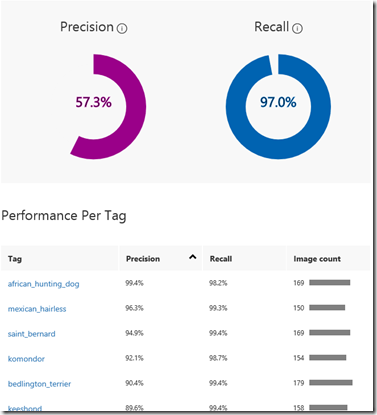

The first was that after I trained my project with 10,000 images, the recall for my model was only 36%. WHAAAT? (And only 4.6% for the compact model.) The second was that Cognitive Services only allows you to train with a maximum of 10,000 images. I contacted Azure support and they said that they were not able to raise that limit at the time. They also suggested that I go with a Classification model.

Maybe I could use two services: the first to detect the dogs and crop the photo down to a single dog, and the second, the breed classifier, to get the breed. But that is an optimization for later.

So back to creating the project. I ended up using a Multilabel Classification Model using the General domain.

Note that I also used the S0 pricing tier, as F0 only allows you to create 50 tags and the dataset we are using contains 120 dog breeds. But you can start with 50 breeds if you want to.

Create a Console App

You can access all the code here: https://gist.github.com/Corneliuskruger/348ca17ebf8c186727d2ce2ef7f9196c

- From Visual Studio, create a new .NET Core console app.

- Add the Microsoft.Azure.CognitiveServices.Vision.CustomVision.Training NuGet package to the project. (Note that it is still in pre-release, so make sure you include pre-release packages in your search results.)

- Now, in your Program class, create variables for your training endpoint and your API key. You can get both on the settings page in the Custom Vision dashboard.

    private const string SouthCentralUsEndpoint = "https://southcentralus.api.cognitive.microsoft.com";
    private const string trainingKey = "<My Training Key>";

Note that the endpoint URL should not include the version and type, i.e. /v2.2/Training.

- Then create your training client.

    CustomVisionTrainingClient trainingApi = new CustomVisionTrainingClient()
    {
        ApiKey = trainingKey,
        Endpoint = SouthCentralUsEndpoint
    };

- Next we get the project that we created. You can also create a new project in code here, but I chose not to.

    var projects = trainingApi.GetProjects();
    var project = projects.FirstOrDefault(p => p.Name.ToLower() == "dogbreeds");
    if (project == null)
        return;

- And then we load the existing Tags. This makes it easy to re-run the upload if anything fails.

    var tags = trainingApi.GetTags(project.Id);

That pretty much takes care of the Custom Vision setup. Next, let's get the data.

- So let's ask the user to specify the directory where the data is located and validate the input. We will assume that the annotation and images directories exist at this point.

    Console.WriteLine("Enter the directory where the data files are extracted");
    var rootDir = Console.ReadLine();
    if (string.IsNullOrEmpty(rootDir) || !Directory.Exists(rootDir))
    {
        Console.WriteLine("Invalid Directory");
        Console.ReadLine();
        return;
    }
    var imageDirectory = Path.Combine(rootDir, "images");
    var annotationDirectory2 = Path.Combine(rootDir, "annotation");
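The snippet above only checks the root directory and assumes the two subfolders are there. If you want to check them as well, a small sketch like the one below could do it. `DataLayout` and `FindMissingFolders` are hypothetical names I made up for illustration, and the folder names are simply the ones used in the code above.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical helper for illustration; not part of the original console app.
public static class DataLayout
{
    // Returns the names of the expected subfolders that do not exist under rootDir.
    public static List<string> FindMissingFolders(string rootDir, params string[] expected)
    {
        var missing = new List<string>();
        foreach (var name in expected)
        {
            if (!Directory.Exists(Path.Combine(rootDir, name)))
            {
                missing.Add(name);
            }
        }
        return missing;
    }
}
```

Calling `DataLayout.FindMissingFolders(rootDir, "images", "annotation")` and bailing out when the result is not empty gives you a clearer error message than a failure halfway through the upload.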

- The next part gets a little into the weeds, but the basics are as follows. We iterate through the directories in the annotations folder and read each file in each directory. From each file we extract the path for the image and the dimensions of the bounding boxes for the objects. We then create an ImageFileCreateEntry object to send to Cognitive Services.

    ...
    // Read the image information from the xml file
    var imageFileName = root.Elements("filename").FirstOrDefault().Value + ".jpg";
    var imageFolder = root.Elements("folder").FirstOrDefault().Value;
    ...
    // Read the tag information from the xml file
    // Add the tag to the API if it does not exist
    tag = trainingApi.CreateTag(project.Id, tagName.ToLower());
    tags.Add(tag);

    // Read the bounding box information from the xml file
    ...
    var region = new Region(tag.Id, left, top, width, height);
    List<Region> regions = new List<Region>();
    regions.Add(region);

    var fileEntry = new ImageFileCreateEntry(imageFileName, File.ReadAllBytes(imagePath), null, regions);

    imageFileEntries.Add(fileEntry);
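The bounding box step is elided above, so here is a rough sketch of what it could look like. It is not the code from the gist. It assumes the annotation follows the usual Pascal VOC layout (a `size` element with `width` and `height`, and an `object` element containing a `bndbox` with `xmin`, `ymin`, `xmax` and `ymax` in pixels), and it converts the box to the normalized 0 to 1 coordinates that Custom Vision regions use. `BoundingBoxReader` and `ReadFirstBox` are hypothetical names.

```csharp
using System;
using System.Globalization;
using System.Xml.Linq;

// Hypothetical helper for illustration; assumes a Pascal VOC style annotation.
public static class BoundingBoxReader
{
    // Reads the first bounding box and returns it as fractions of the image size.
    public static (double Left, double Top, double Width, double Height) ReadFirstBox(XElement root)
    {
        var size = root.Element("size");
        double imageWidth = ReadNumber(size, "width");
        double imageHeight = ReadNumber(size, "height");

        var box = root.Element("object")?.Element("bndbox");
        double xmin = ReadNumber(box, "xmin");
        double ymin = ReadNumber(box, "ymin");
        double xmax = ReadNumber(box, "xmax");
        double ymax = ReadNumber(box, "ymax");

        // Divide pixel values by the image dimensions to normalize them.
        return (xmin / imageWidth,
                ymin / imageHeight,
                (xmax - xmin) / imageWidth,
                (ymax - ymin) / imageHeight);
    }

    // Parses a numeric child element, failing loudly if it is missing.
    private static double ReadNumber(XElement parent, string name)
    {
        var element = parent?.Element(name) ?? throw new FormatException($"Missing <{name}> element.");
        return double.Parse(element.Value, CultureInfo.InvariantCulture);
    }
}
```

The four values it returns line up with the `left`, `top`, `width` and `height` arguments passed to `Region` in the snippet above. An annotation with several dogs has several `object` elements, which this sketch ignores beyond the first.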

- Then the last step is to assemble a package of data and upload it. Note that there is a limit on how many images you can upload in one call. Currently it is 64. I kick off the upload after 60 images to be on the safe side, but you can change that to suit your needs.

    trainingApi.CreateImagesFromFiles(project.Id, new ImageFileCreateBatch(imageFileEntries));
    imageFileEntries.Clear();
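If you collect all the entries first instead of uploading as you go, the batching can be pulled out into a small generic helper. This is just a sketch of that idea, not what the gist does, and `Batching.InBatches` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; splits any sequence into fixed-size chunks.
public static class Batching
{
    // Yields lists of at most batchSize items, preserving the original order.
    // Because this is an iterator, the argument check runs when enumeration starts.
    public static IEnumerable<List<T>> InBatches<T>(IEnumerable<T> source, int batchSize)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var batch = new List<T>(batchSize);
        foreach (var item in source)
        {
            batch.Add(item);
            if (batch.Count == batchSize)
            {
                yield return batch;
                batch = new List<T>(batchSize);
            }
        }

        // Hand back whatever is left over as a final, smaller batch.
        if (batch.Count > 0)
        {
            yield return batch;
        }
    }
}
```

With that in place, the upload becomes a loop over `Batching.InBatches(imageFileEntries, 60)` that passes each batch to `new ImageFileCreateBatch(batch)` and calls `CreateImagesFromFiles` as shown above.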

Train your model

Now that you have all the building blocks, run the app. It will take a while to upload all that data. In the code I tried to keep things simple, but with a little bit of async magic you should be able to make it much more performant. However, that is not the focus of this article.

Now you can head over to the Custom Vision portal again. You should see the uploaded images and the tags associated with them. Look through a few and do some sanity checks. When you are happy with the data, the next step is to train the model. Hit the green “Train” button. Once training is done, you will see a graph that helps you understand how accurate the model's predictions are.

Go ahead and run some Quick Tests. I ran a few, and the results seemed pretty good.
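For the curious, here is a hedged sketch of what that async magic might look like: run several batch uploads at once, but cap how many are in flight. I have not run this against Custom Vision. `ThrottledUploader` is a hypothetical name, and `uploadBatchAsync` is a stand-in delegate for whatever async upload call you use, so treat this as a pattern rather than a drop-in replacement.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper for illustration; the upload itself is supplied by the caller.
public static class ThrottledUploader
{
    // Runs uploadBatchAsync for every batch, with at most maxConcurrency running at once.
    public static async Task UploadAllAsync<T>(
        IEnumerable<IReadOnlyList<T>> batches,
        Func<IReadOnlyList<T>, Task> uploadBatchAsync,
        int maxConcurrency)
    {
        using (var gate = new SemaphoreSlim(maxConcurrency))
        {
            var tasks = batches.Select(async batch =>
            {
                // Wait for a free slot before starting this upload.
                await gate.WaitAsync();
                try
                {
                    await uploadBatchAsync(batch);
                }
                finally
                {
                    gate.Release();
                }
            }).ToList();

            // Completes when every upload has finished; rethrows if any of them failed.
            await Task.WhenAll(tasks);
        }
    }
}
```

Keep the concurrency low to start with. The service may throttle you if you push too many requests at once, so a value of 2 or 3 is a more sensible first try than 20.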

Next Steps

In the next article, I am going to show how to use the prediction URL from a Xamarin application. The plan is to do some real-time analysis of video.

References:

The code on GitHub:

https://gist.github.com/Corneliuskruger/348ca17ebf8c186727d2ce2ef7f9196c

The Stanford Dogs Dataset images and annotations:

http://vision.stanford.edu/aditya86/ImageNetDogs/

Here is the tutorial to create the app that is used to train the model:

https://docs.microsoft.com/en-us/azure/cognitive-services/Custom-Vision-Service/csharp-tutorial-od